In a previous chapter, we showed that multiplying the sums of elements of the vectors and computes the sum of the outer product terms, i.e. . We also showed that the outer product “contains” the inner product along its main diagonal.

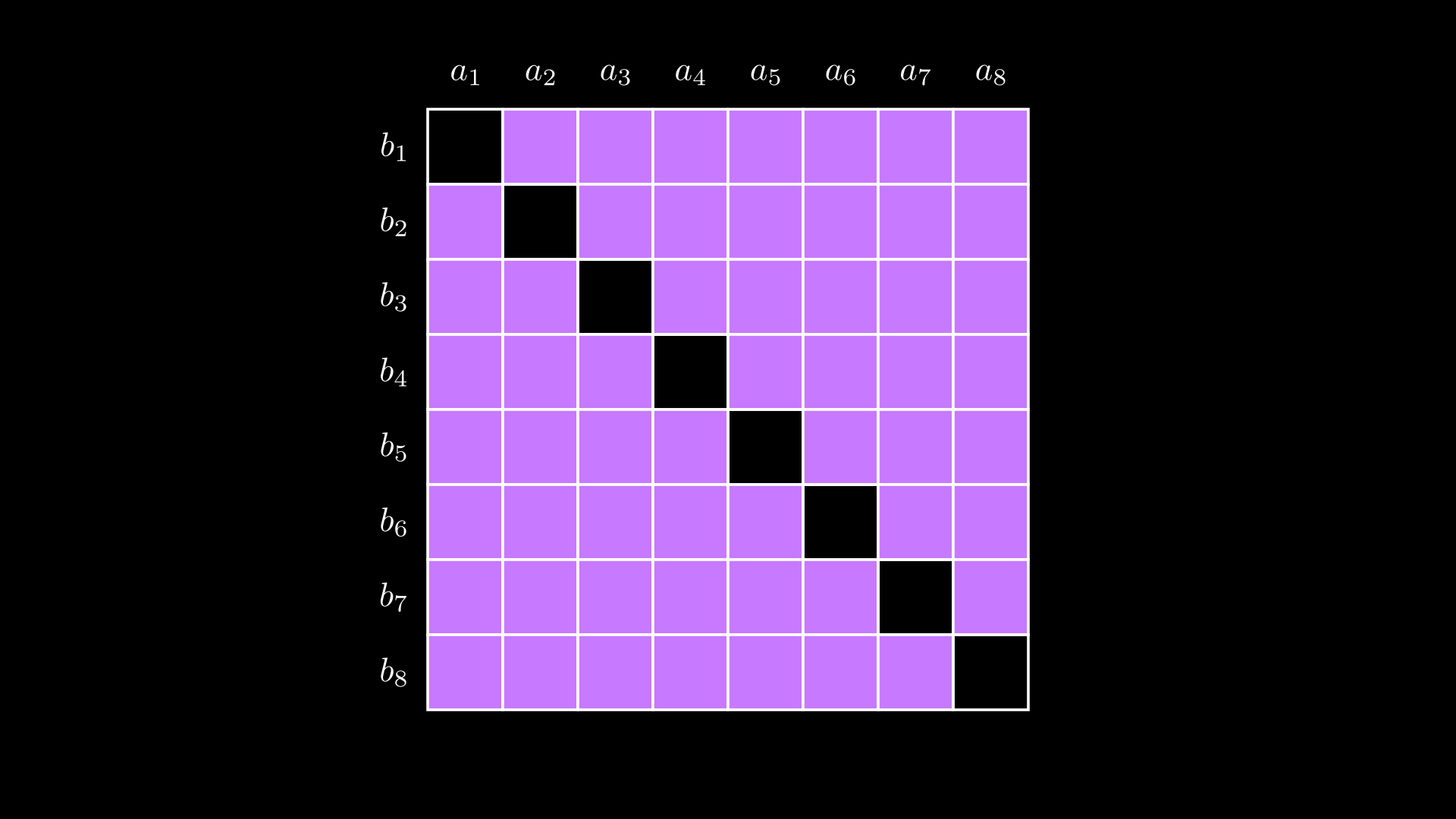

To “extract” the inner product , one must subtract from the outer product all terms that are not part of the inner product, i.e. the purple shaded region below:

There are such terms, so doing this directly is not efficient.

However, observe that we can “fill up the outer product” in the manner shown in the animation below:

In the animation above, after the prover sends the off-diagonal terms, the prover folds both and , reducing their length by half.

At the end, after we have folded and times, the size of the vectors is 1. When the outer product equals the inner product and we simply reveal both vectors, which will be of constant size.

Recap – and how this relates to the previous chapter’s algorithm

Previously, we showed how to prove we know the opening to a commitment while sending elements instead of . As a recap:

The prover commits the vector to as . The prover then sends the off-diagonal terms and . The verifier responds with and the prover folds the vector over as and sends to the verifier. Since is of length , we have cut the size of the data to be transmitted in half.

The verifier then checks that

The original intent of this algorithm was to prove that we know the commitment to by showing that we know the sum of the inner product and the off-diagonal terms and equals the sum of the elements of the outer products of the pairwise partitions of and .

If we recursively apply this algorithm, we get the algorithm described at the beginning of this chapter.

However, we could also interpret this procedure as us proving we know the opening to the commitment where with respect to the basis vector of length . We could naïvely prove we know the opening of by sending and the verifier checking that .

But rather than proving we know the opening to by sending , we can recursively apply the algorithm to prove we know the opening to by sending a vector of size . In fact, we can keep recursing until is of size .

The animation below provides an intuition of what is happening. The next section describes the animation in detail.

For this algorithm to work, the length of the vectors must be a power of two. However, if the length is not a power of two, we can pad the vectors with zeros until the length is a power of two.

Proving we know the opening to with data

The algorithm

The prover and verifier agree on a basis vector of length . The prover sends the verifier which is . The prover wishes to convince the verifier that they know the opening to while sending only logarithmic-sized data.

The prover and verifier then engage in the following algorithm below. The arguments after the | mean they are only known to the prover.

In the algorithm description below is the length of the vectors in the input, which are all of the same length.

\texttt{prove_commitments_log}(P, \mathbf{G}, | \mathbf{a})

Case 1:

- The prover sends and the verifier checks that and the algorithm ends.

Case 2:

- The prover computes and sends to the verifier where

- The verifier sends randomness

- The prover and verifier both compute:

- The prover computes

- \texttt{prove_commitments_log}(P', \mathbf{G}', \mathbf{a}')

Commentary on the algorithm

The prover is recursively proving that, given values and , they know the such that . Both parties recursively fold until it is a single point, and the prover recursively folds until it is a single point.

The prover transmits a constant amount of data on each iteration, and the recursion will run at most times, so the prover sends data.

We stress that this algorithm is not zero knowledge because in the case , the verifier learns the entire vector. The verifier could also send non-random values for to try to learn something about .

However, recall that our motivation for this algorithm is to reduce the size of the check , and and were not secret to begin with.

In fact, we have not shown how to prove we know the inner product with logarithmic-sized data, we have only shown that we know the opening to a commitment with a logarithmic-sized data. However, it is straightforward to update our algorithm to show we know the inner product of two vectors, as we will do later in this article.

Runtime

The verifier carries out the computation times, and the first takes time. At first glance, it seems that the verifier’s runtime is . However, notice that with each iteration, is halved, resulting in a runtime or , leading to an overall runtime for the verifier of .

Proving we know the inner product

We now adjust the algorithm above to prove that we conducted the inner product between two scalar vectors, as opposed to a vector of field elements and a vector of elliptic curve points.

Specifically, we must prove that holds a commitment to the inner product . This inner product is a scalar, so we do a normal Pedersen commitment instead of a vector commitment. For this we use a random elliptic curve point (with unknown discrete log) . Thus, .

However, we cannot simply re-use our previous algorithm because the prover can provide multiple openings to . For example, if and , the prover can open also open with vectors and .

To create a secure proof of knowledge of an inner product, the prover must also compute and send a commitment for and .

The naive solution is to run the commitment algorithm twice. The first two times are to prove and are properly committed to and and the third time is to show that was properly computed. In the next section, we show how to modify our algorithm to compute the inner product when both vectors are field elements.

Converting a scalar inner product to a scalar-point inner product

Let be the vector consisting of copies of the point , i.e.

Thus,

Note that is equal to .

That is, we can multiply each entry of by and take the inner product of that vector with and the result will be the same as . For example, if and , then .

From there, we could prove the following:

One proof instead of three

We can do better than sending three commitments and run the algorithm three times.

Because the points in , and have an unknown discrete log relationship, they can be combined as a single commitment .

We will slightly re-arrange the commitment as to make the next trick more obvious.

To prove this entire commitment at once, instead of three inner products, observe that

where means vector concatenation.

Effectively, we are proving we committed vector to elliptic curve vector basis .

In practice, we don’t actually concatenate the vectors because the total length would generally not be a power of two. Rather, we compute the , , and components separately, but compute and as if they were concatenated.

We show the algorithm in the animation below:

The algorithm

Given and commitment we wish to prove that is committed as claimed. That is, , , and are committed to and .

\texttt{prove_commitments_log}(P, \mathbf{G}, \mathbf{H},Q, |\mathbf{a}, \mathbf{b})

Case 1:

- The prover sends and the verifier checks that . The algorithm ends.

Case 2:

- The prover computes and sends to the verifier which are simply the off-diagonal terms of all the vectors concatenated together (see the animation above):

- The verifier sends randomness .

- The prover and verifier both compute:

- The prover computes:

- \texttt{prove_commitments_log}(P', G', H', \mathbf{a}', \mathbf{b}')

The following exercises can be found in our ZK Bulletproofs GitHub Repo:

Exercise 1: Fill in the missing code below to implement the algorithm to prove that is committed to to produce point :

from py_ecc.bn128 import G1, multiply, add, FQ, eq, Z1

from py_ecc.bn128 import curve_order as p

import numpy as np

from functools import reduce

import random

def random_element():

return random.randint(0, p)

def add_points(*points):

return reduce(add, points, Z1)

# if points = G1, G2, G3, G4 and scalars = a,b,c,d vector_commit returns

# aG1 + bG2 + cG3 + dG4

def vector_commit(points, scalars):

return reduce(add, [multiply(P, i) for P, i in zip(points, scalars)], Z1)

# these EC points have unknown discrete logs:

G_vec = [(FQ(6286155310766333871795042970372566906087502116590250812133967451320632869759), FQ(2167390362195738854837661032213065766665495464946848931705307210578191331138)),

(FQ(6981010364086016896956769942642952706715308592529989685498391604818592148727), FQ(8391728260743032188974275148610213338920590040698592463908691408719331517047)),

(FQ(15884001095869889564203381122824453959747209506336645297496580404216889561240), FQ(14397810633193722880623034635043699457129665948506123809325193598213289127838)),

(FQ(6756792584920245352684519836070422133746350830019496743562729072905353421352), FQ(3439606165356845334365677247963536173939840949797525638557303009070611741415))]

# return a folded vector of length n/2 for scalars

def fold(scalar_vec, u):

pass

# return a folded vector of length n/2 for points

def fold_points(point_vec, u):

pass

# return (L, R)

def compute_secondary_diagonal(G_vec, a):

pass

a = [4,2,42,420]

P = vector_commit(G_vec, a)

L1, R1 = compute_secondary_diagonal(G_vec, a)

u1 = random_element()

aprime = fold(a, u1)

Gprime = fold_points(G_vec, pow(u1, -1, p))

L2, R2 = compute_secondary_diagonal(Gprime, aprime)

u2 = random_element()

aprimeprime = fold(aprime, u2)

Gprimeprime = fold_points(Gprime, pow(u2, -1, p))

assert len(Gprimeprime) == 1 and len(aprimeprime) == 1, "final vector must be len 1"

assert eq(vector_commit(Gprimeprime, aprimeprime), add_points(multiply(L2, pow(u2, 2, p)), multiply(L1, pow(u1, 2, p)), P, multiply(R1, pow(u1, -2, p)), multiply(R2, pow(u2, -2, p)))), "invalid proof"

Exercise 2: Modify the code above to implement the algorithm that proves holds a commitment to , and , and that . Use the following basis vector for and EC point :

H = [(FQ(13728162449721098615672844430261112538072166300311022796820929618959450231493), FQ(12153831869428634344429877091952509453770659237731690203490954547715195222919)),

(FQ(17471368056527239558513938898018115153923978020864896155502359766132274520000), FQ(4119036649831316606545646423655922855925839689145200049841234351186746829602)),

(FQ(8730867317615040501447514540731627986093652356953339319572790273814347116534), FQ(14893717982647482203420298569283769907955720318948910457352917488298566832491)),

(FQ(419294495583131907906527833396935901898733653748716080944177732964425683442), FQ(14467906227467164575975695599962977164932514254303603096093942297417329342836))]

Q = (FQ(11573005146564785208103371178835230411907837176583832948426162169859927052980), FQ(895714868375763218941449355207566659176623507506487912740163487331762446439))

This tutorial is part of a series on Bulletproof ZKP.